In the last quarter of 2025, an industry study assessed the reliability of the leading AI chatbots used in professional settings. The results revealed striking differences in operational reliability among the top models, underscoring the importance of choosing tools that deliver dependable results rather than those that simply dominate market share. Reliability is not a minor concern: as AI systems are built into routine corporate workflows, companies need to weigh accuracy, consistency, and uptime alongside features and popularity.

What the 2025 AI Reliability Study Measured

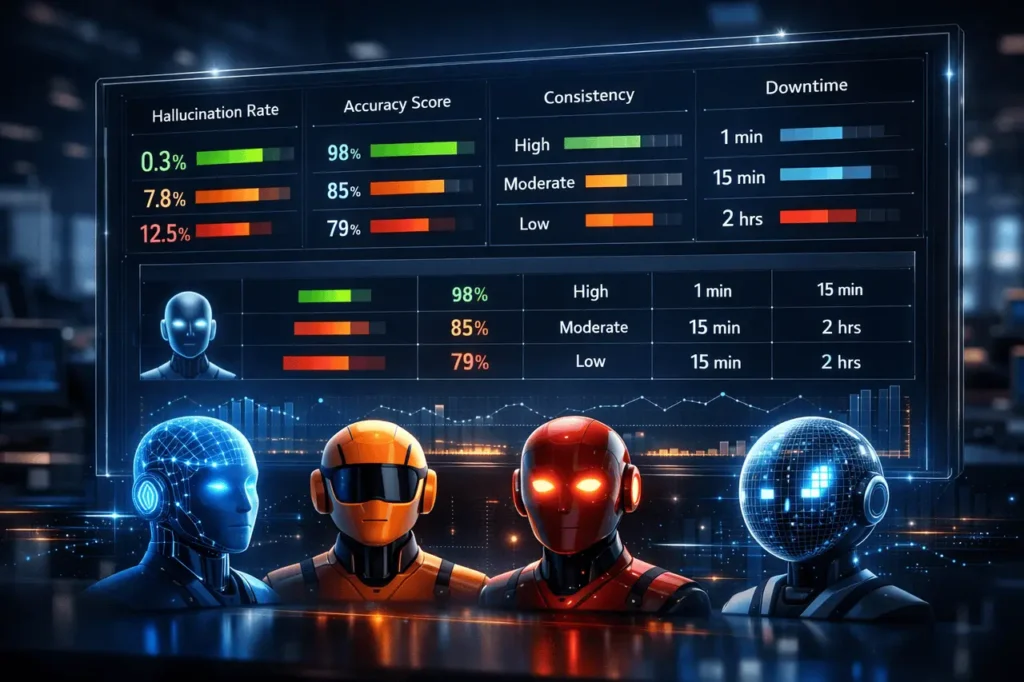

The December 2025 AI reliability test, carried out by the industry data aggregator Relum, evaluated ten leading AI chatbots against four key indicators: hallucination rate, customer rating, response consistency, and downtime frequency. Using these parameters, each model was assigned an overall risk score between 0 (lowest risk) and 99 (highest risk) to reflect its suitability for workplace use.

- Hallucination Rate: How often a model presents false information as fact, a significant threat to critical business processes.

- Customer Rating: Reflects end users’ satisfaction with the product’s performance.

- Consistency: Measures the model’s ability to deliver stable results across a variety of inputs.

- Downtime Frequency: Tracks service interruptions or unavailability, crucial for enterprise deployment.

By combining these measures, the study aimed to give businesses a clearer picture of how each chatbot performs in everyday tasks, particularly where accuracy is essential.

Grok Tops the Reliability Rankings

According to the findings, Grok, the chatbot developed by xAI, stood out for its accuracy, posting the lowest hallucination rate among the models analysed at just 8%. In other words, Grok produced fewer fabricated or incorrect responses than its rivals.

In addition to the very low hallucination rate:

- Grok received a high customer satisfaction score of 4.5 out of 5.

- Its consistency score was 3.5, indicating steady performance across different tasks.

- It maintained low downtime (0.07 per cent), indicating technical stability for enterprise use.

When all of these factors were combined, Grok achieved an overall risk score of just 6, indicating low risk for workplace deployment. Although Grok’s market share is smaller than that of some rivals, its accuracy makes it an attractive option when precision matters more than brand recognition.

ChatGPT and Other Competitors: Performance Overview

While some models lead in market share and general adoption, the study found that popularity and reliability do not necessarily go together:

- ChatGPT: Although ChatGPT is the most popular chatbot and dominates the market, it recorded a 35 per cent hallucination rate in the study, making it one of the least reliable options for work-related tasks. Its high share of incorrect outputs contributed to the highest possible risk score of 99.

- Gemini: Google’s Gemini recorded an even higher hallucination rate of 38%, suggesting that models with broad capabilities may struggle to maintain factual accuracy at scale.

Other models in the ranking included DeepSeek and Claude, with varying levels of user satisfaction, accuracy, and stability. DeepSeek, for instance, performed well, with a 14% hallucination rate and high reliability, while Claude and others fell in the middle of the risk range.

Why Hallucinations Matter for Businesses

Hallucinations, the confident fabrication or misrepresentation of facts, are among the most significant challenges facing artificial intelligence. When AI is used for customer interaction, compliance reporting, or technical analysis, hallucinations can cause operational failures, poor decision-making, and reputational damage.

In the workplace, chatbots that hallucinate regularly could:

- Mislead employees with inaccurate information.

- Compromise sensitive decision-making processes.

- Introduce errors into reports, briefs, and other content.

Relum’s product development leadership noted that 65% of U.S. companies now use AI chatbots to support employees in their daily tasks, and that more than 40% of employees share sensitive company data with these systems. Both figures underscore why reliability must be an essential part of any AI strategy.

Making Reliable AI Decisions in 2026 and Beyond

As businesses continue to build AI into core workflows, including client service, legal writing, analysis, and data synthesis, the choice of model becomes crucial. Tools with lower hallucination rates and higher reliability are less likely to undermine business outcomes and more likely to support accurate, compliant processes.

Adopting AI responsibly means:

- Evaluating models on accuracy, not just popularity or feature count.

- Testing performance under real-world conditions in your own field, since benchmark results vary by task.

- Monitoring uptime and service consistency, particularly for mission-critical use cases.
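The pilot-testing and monitoring steps above can be tracked with a few simple calculations. The Python sketch below is a hypothetical example, not a prescribed method: it assumes reviewers flag each pilot answer as hallucinated or not, that downtime is logged in minutes, and that the pass thresholds (`max_hallucination=0.10`, `max_downtime_pct=0.1`) are placeholders each organisation would set for itself. `PilotAnswer` and `passes_pilot` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class PilotAnswer:
    task: str           # hypothetical task label, e.g. "contract summary"
    hallucinated: bool  # True if a reviewer found a fabricated or incorrect fact


def hallucination_rate(answers):
    """Fraction of reviewed pilot answers flagged as hallucinated."""
    if not answers:
        raise ValueError("no reviewed answers to score")
    return sum(a.hallucinated for a in answers) / len(answers)


def downtime_pct(minutes_observed, minutes_unavailable):
    """Per cent of the observation window during which the service was unavailable."""
    if minutes_observed <= 0:
        raise ValueError("observation window must be positive")
    return 100 * minutes_unavailable / minutes_observed


def passes_pilot(answers, minutes_observed, minutes_unavailable,
                 max_hallucination=0.10, max_downtime_pct=0.1):
    """Hypothetical go/no-go check; the default thresholds are placeholders."""
    return (hallucination_rate(answers) <= max_hallucination
            and downtime_pct(minutes_observed, minutes_unavailable) <= max_downtime_pct)
```

Grouping `answers` by `task` before scoring would show whether a model’s accuracy varies across the specific jobs you care about, which is the point of testing in your own field.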

The 2025 reliability study provides a data-driven basis for these choices, suggesting that more reliable models such as Grok can earn their place through quality where it matters most, rather than through hype.

My Final Thoughts

The 2025 AI reliability study highlights a significant shift in how companies evaluate the reliability and effectiveness of generative AI tools. Precision, low hallucination rates, and operational stability are no longer luxuries; they are essential for efficient and responsible AI deployment. The study found that a large portion of the workforce relies on AI daily and that many users share sensitive business data with these tools. In these environments, unreliable outputs can translate directly into legal, financial, or reputational risk.

The research also challenges the idea that the best-known AI software is automatically the most appropriate for professional settings. Models that emphasise truth-seeking behaviour, consistency, and uptime could provide greater long-term value, even if they trail competitors in adoption. For companies planning their AI strategy for 2026 and beyond, the message is clear: evaluate AI tools against quantifiable reliability metrics, not hype. Choosing tools that are less prone to hallucinations and deliver dependable performance can significantly improve trust, productivity, and decision-making across the organisation.

Frequently Asked Questions

1. What exactly is an AI hallucination?

An AI hallucination occurs when a model produces plausible-sounding information that is fabricated or factually incorrect. Reducing hallucinations is vital for accuracy in professional environments.

2. Why did Grok score better than ChatGPT on reliability?

Grok’s low hallucination rate, high customer rating, consistent answers, and minimal downtime all suggest a strong emphasis on factual grounding. Together, these factors produced a far lower overall risk score: 6, compared with 99 for ChatGPT.

3. Does a lower rate of hallucination mean that a model is more effective?

Not necessarily. A lower hallucination rate suggests greater factual accuracy, but other qualities, such as creativity, research depth, or multimodal abilities, may also matter depending on the use case.

4. Should companies abandon popular models such as ChatGPT?

Not necessarily. Some popular models offer better integrations and community support; however, businesses must weigh these against their reliability requirements. For sensitive tasks, precision-focused models may be the better choice.

5. Can hallucination rates change over time?

Yes. Hallucination rates can shift with model updates, further training, and deployment context, so it is vital to re-evaluate models whenever they change.

6. What should businesses consider when choosing an AI chatbot to work with?

Businesses should evaluate accuracy (hallucination rate), operational stability (uptime), consistency across tasks, and actual performance in pilot tests aligned with their business requirements.
